Technical SEO Best Practices

The whole point of SEO is to optimize every part of your site that search engines look at, and that includes following Technical SEO best practices! Technical SEO is just as important as other aspects of SEO. Why? Because if you're not doing it, your competitors are!

Technical SEO is a very important step in the whole SEO process.

It relates directly to your website and server and should be done before Off-Page SEO. If there are problems with your Technical SEO, they will interfere with your other SEO efforts.

There is no point diving into Off-Page SEO if there are issues on the technical side. Before your traffic starts coming in, you want any issues corrected and Google able to crawl your pages without generating errors.

In this post, I will cover the best practices to follow when performing a Technical SEO Audit on your site.

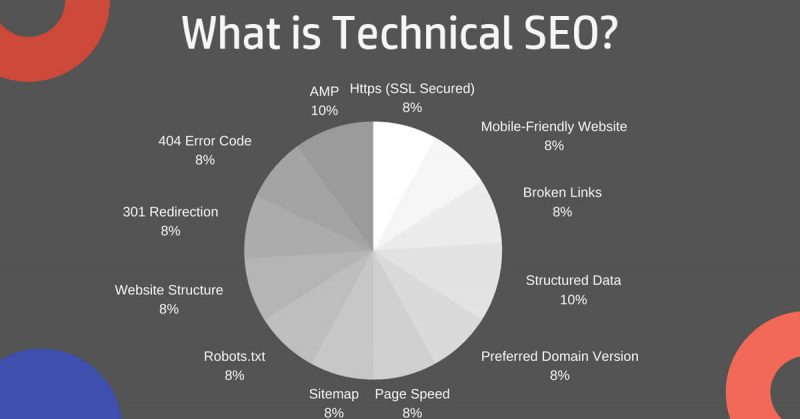

What is Technical SEO?

Technical SEO involves optimizing your website for crawling and indexing and eliminating server errors. It’s basically playing nice with search engines to allow them access to your site and to index the pages you want to be indexed. It has nothing to do with your content or backlinks and everything to do with your code, website speed, server and mobile friendliness.

Here is a quick rundown on key Technical SEO best practices.

- Optimize Robots.txt

- Optimize Your URL Structure

- Canonical URLs

- Optimize 404 Page

- XML Sitemap

- SSL and HTTPS

- Website Speed

- Mobile Friendliness

- Specify a Preferred Domain

- Navigation and Site Structure

- Breadcrumbs

- Structured Data Markup

- Pagination

- Multi-Lingual websites

- Google Search Console and Bing Webmaster Tools

What is robots.txt?

Robots.txt is simply a plain text file (*.txt). It is stored in the root directory of your website (for example, https://example.com/robots.txt) and tells search engine crawlers which pages they may crawl and which they should stay out of. You can also use it to block specific crawlers, or your entire site. Keep in mind that it controls crawling, not indexing: a blocked page can still end up in the index if other sites link to it.

If you are having indexing issues, check your robots.txt for any Disallow rules. If Google Search Console reports the error "Submitted URL blocked by robots.txt", it means you submitted the URL for indexing but Google cannot crawl it because of a Disallow rule in robots.txt.
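You can check a Disallow rule yourself before Google reports a problem, because Python's standard library can read robots.txt rules. Below is a minimal sketch. It assumes a made-up robots.txt: the `/private/` and `/tmp/` paths and the `BadBot` crawler are hypothetical. `is_crawlable` is my own helper name, not part of any tool.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt; paste in the contents of your own file instead.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /tmp/

User-agent: BadBot
Disallow: /
"""


def is_crawlable(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in ("https://example.com/blog/post.html",
                "https://example.com/private/report.html"):
        print(url, is_crawlable(ROBOTS_TXT, "Googlebot", url))
```

Python's parser follows the original robots.txt rules and does not understand Google's `*` and `$` wildcards. For complex files, treat its answer as a rough check and confirm with Google's own tools.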

URL Structure

Use - (hyphens), not _ (underscores), to separate words in URLs.

Make them short but descriptive with keywords.

Use lowercase characters only.

Stick to using only necessary words that describe the content on the page (keywords).

Do not commit the offence of stuffing keywords into your URLs.
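If you build URLs from page titles, the rules above can be applied automatically. Here is a minimal Python sketch, assuming English, ASCII-only titles. The `STOP_WORDS` list and the `slugify` name are hypothetical, so adjust them for your own site.

```python
import re

# A small, hypothetical list of filler words to drop from URLs.
STOP_WORDS = {"a", "an", "the", "and", "or", "of", "to", "in", "for"}


def slugify(title: str) -> str:
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Lowercase first, then keep only runs of letters and digits.
    # Spaces, underscores and punctuation all act as word separators.
    words = re.findall(r"[a-z0-9]+", title.lower())
    # Keep only the words that describe the content.
    kept = [word for word in words if word not in STOP_WORDS]
    return "-".join(kept)


if __name__ == "__main__":
    print(slugify("The 10 Best Hiking Trails in Colorado"))
    print(slugify("What_is robots.txt?"))
```

The first title becomes `10-best-hiking-trails-colorado` and the second `what-is-robots-txt`. Underscores are turned into hyphens along the way.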

Canonical URLs

What are canonical URLs?

Google is smart but sometimes not that smart. Take HTTPS (SSL), which you should be using at all times. If your site can be reached over both http and https, Google sees two identical copies of each page on your site: http://example.com/samepage.html and https://example.com/samepage.html. They are, however, the same page. In your website directory there is only one file present, the one you created: samepage.html.

To declare to Google which version of the page you want indexed, add this to the `<head>` section of your HTML page: `<link rel="canonical" href="https://example.com/samepage.html">`. This tells Google you prefer the https version over the http version. Google treats the tag as a strong hint rather than a strict command, so keep your internal links and redirects consistent with it.

Google Search Console is a great tool for managing your canonical URLs. Its URL Inspection tool shows both the canonical you declared and the one Google actually selected.
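To spot-check that a page declares the canonical URL you expect, here is a minimal Python sketch using the standard library's HTML parser. It assumes you already have the page's HTML as a string. `CanonicalFinder` and `find_canonical` are hypothetical helper names.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Remember the href of the first <link rel="canonical"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        # rel may hold several space-separated values, so split before matching.
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attributes.get("href")


def find_canonical(html: str) -> Optional[str]:
    """Return the page's declared canonical URL, or None if it has none."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonical


if __name__ == "__main__":
    page = ('<html><head><link rel="canonical" '
            'href="https://example.com/samepage.html"></head></html>')
    print(find_canonical(page))
```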

404 Error Page

404 error pages are shown to users when they follow a link to a page that no longer exists. Without a helpful error page and message, users might leave your website because they are confused about what happened.

404 pages should have the same structure as your other pages. For example, the menus, style and footer should match the rest of your pages. There should be a friendly message telling users about the error, along with options for navigating to other pages. It should be very simple for them to get off the page and on to other pages, as easy as clicking a "Home" link.
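One technical detail is worth checking: a friendly error page should still be sent with a real 404 status code. If it returns 200 OK, Google may treat it as a "soft 404". Below is a minimal Python sketch of that logic, assuming a tiny site with a hypothetical `EXISTING_PAGES` table and a hypothetical `respond` helper. It is not tied to any particular web server.

```python
from http import HTTPStatus
from typing import Tuple

# Hypothetical pages that exist on the site (path -> HTML).
EXISTING_PAGES = {"/": "<h1>Home</h1>", "/about.html": "<h1>About us</h1>"}

NOT_FOUND_PAGE = """<!doctype html>
<html>
<head><title>Page not found</title></head>
<body>
  <!-- Reuse the same menu, styles and footer as the rest of the site here. -->
  <h1>Sorry, we couldn't find that page.</h1>
  <p>It may have moved or been deleted. Try one of these instead:</p>
  <ul>
    <li><a href="/">Home</a></li>
    <li><a href="/about.html">About us</a></li>
  </ul>
</body>
</html>"""


def respond(path: str) -> Tuple[int, str]:
    """Return (HTTP status code, HTML body) for a requested path."""
    if path in EXISTING_PAGES:
        return HTTPStatus.OK, EXISTING_PAGES[path]
    # Friendly page for people, but a real 404 status for search engines.
    return HTTPStatus.NOT_FOUND, NOT_FOUND_PAGE
```

In a real server you would send the returned status along with the body. For example, an `http.server` handler would call `self.send_response(status)` before writing the HTML.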

XML Sitemap

Search engines use sitemaps to navigate your website. A sitemap.xml is basically a list of every page on your site that you want indexed. Stick to the important pages; there is no need to list, for example, 404 error pages.

It’s important you submit your sitemap to search engines. They will eventually find it but submitting it to them gets the ball rolling. With Google, you can do this using Search Console and for Bing, you can use Bing Webmaster Tools.

Equally important is to maintain your sitemap and keep it up to date. When you create a new page, you must edit your sitemap to add it. If you use WordPress, this process is automated.
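If your site isn't on WordPress, a small script can regenerate the sitemap whenever you add a page. Here is a minimal Python sketch following the sitemaps.org format. It assumes you can list your pages as (URL, last-modified date) pairs. `build_sitemap` and the example URLs and dates are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: Iterable[Tuple[str, str]]) -> str:
    """Build sitemap.xml text from (url, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # special characters are escaped for us
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2024-01-31
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        ("https://example.com/", "2024-01-31"),
        ("https://example.com/about.html", "2024-01-15"),
    ]))
```

Save the output as sitemap.xml in your site's root directory, then submit it in Search Console and Bing Webmaster Tools.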

SSL certificate – HTTPS

SSL (https) should be on every website, plain and simple. When you link out to other websites, you also want to make sure those links use https.

Without https, browsers warn users that your website is not secure. Chrome, for example, labels http pages "Not secure" in the address bar. Browsers with an HTTPS-only mode turned on go further and show a full-page warning before loading an http site. Users can still click through, but the warning strongly suggests they don't.

There are several types of SSL certificates, but for a basic website a free certificate from letsencrypt.org is fine. You will need to install the certificate yourself. Let's Encrypt certificates expire after 90 days, so they must be renewed regularly; a client such as Certbot can automate this.

These days, many hosting companies also offer free SSL on your primary domain name. This is the easiest route because it is configured automatically.

In any event, never go without SSL (https). When browsers warn users about security issues, many will simply back out and visit another website.
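To find outbound links that still point at plain http addresses, here is a minimal Python sketch using the standard library's HTML parser. It assumes you have the page's HTML as a string. It only checks `<a href>` links, and `find_insecure_links` is a hypothetical helper name.

```python
from html.parser import HTMLParser
from typing import List


class InsecureLinkFinder(HTMLParser):
    """Collect href values on <a> tags that point at plain http:// URLs."""

    def __init__(self) -> None:
        super().__init__()
        self.insecure: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href") or ""
        if href.lower().startswith("http://"):
            self.insecure.append(href)


def find_insecure_links(html: str) -> List[str]:
    """Return every http:// link on the page, in document order."""
    finder = InsecureLinkFinder()
    finder.feed(html)
    finder.close()
    return finder.insecure


if __name__ == "__main__":
    page = ('<p><a href="https://secure.example.org/">ok</a> '
            '<a href="http://old.example.net/page">old</a></p>')
    print(find_insecure_links(page))
```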

Website Speed – Core Web Vitals

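In the Technical SEO world, website speed is now usually discussed in terms of Core Web Vitals. These are Google's metrics for loading (Largest Contentful Paint), responsiveness (Interaction to Next Paint) and visual stability (Cumulative Layout Shift).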

It is generally most important when it comes to optimizing for mobile devices but also plays a role in desktop optimization.

Speed also helps with the crawling of your website: the faster your website is, the faster Google can crawl it. This lets Google crawl more sites in less time, which actually saves them money.

Here is what you want to look at when optimizing for speed:

Optimize your images for mobile devices. Specify each image's size in your HTML code (the width and height attributes) so the browser can reserve space for it before it loads.

Sometimes it's OK to use a lower resolution. You wouldn't want to go too low, though, if you are, let's say, a photographer; then you would more likely want high resolution.

You can always use two separate images, one for mobile and the other for desktop. For example, for your header image you can use a large image on desktop and a smaller copy of the same image that is displayed only on mobile devices.

Use a CDN to serve your images.

Defer loading of scripts with the "defer" attribute, and lazy-load images with loading="lazy" in your HTML code. With WordPress, you can use a lazy-load plugin for images.

Load JavaScript asynchronously (the "async" attribute) where possible.

Minify your CSS, JS and HTML code

Use a server that is capable of handling your traffic with lots of room to spare

Configure your server to use the latest PHP version (but give new releases some time before updating).

WordPress is the devil when it comes to optimizing for speed. The CMS itself bogs things down, and some themes are hopeless when it comes to speed.

Take inventory of your plugins and lose the ones you do not need. Also, be sure to keep active plugins updated.

Set up caching in your .htaccess file or use a plugin to do this for you. With caching rules in place, your visitors' browsers store copies of your files. When they return to your site, those files load from the local cache instead of being downloaded again.
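Several of these tips can be checked directly in a page's HTML. Here is a minimal Python sketch that flags images without width/height attributes, images without `loading="lazy"`, and external scripts with neither `defer` nor `async`. It assumes you have the page's HTML as a string. `SpeedAudit` and `audit_page` are hypothetical names, and the sketch is no substitute for a real speed test.

```python
from html.parser import HTMLParser
from typing import List


class SpeedAudit(HTMLParser):
    """Collect warnings for <img> and <script> tags that miss basic speed tips."""

    def __init__(self) -> None:
        super().__init__()
        self.warnings: List[str] = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)  # valueless attributes such as defer map to None
        if tag == "img":
            src = a.get("src", "?")
            if "width" not in a or "height" not in a:
                self.warnings.append(f"img {src}: missing width/height")
            if a.get("loading") != "lazy":
                self.warnings.append(f"img {src}: not lazy-loaded")
        elif tag == "script" and "src" in a:
            # Only external scripts (with src) can be deferred or async.
            if "defer" not in a and "async" not in a:
                self.warnings.append(f"script {a['src']}: no defer/async")


def audit_page(html: str) -> List[str]:
    """Return a list of human-readable speed warnings for one page."""
    audit = SpeedAudit()
    audit.feed(html)
    audit.close()
    return audit.warnings


if __name__ == "__main__":
    page = ('<img src="hero.jpg" width="1200" height="600">'
            '<img src="a.jpg" loading="lazy">'
            '<script src="app.js"></script><script src="x.js" defer></script>')
    for warning in audit_page(page):
        print(warning)
```

Treat the "not lazy-loaded" warning with judgment. The main image at the top of the page usually should not be lazy-loaded, because it is the first thing visitors see.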

Mobile Friendliness

Mobile friendliness is a top priority for Google because it gives users what they want. Users want to browse websites with ease on mobile devices, and for the most part they want pages to load in under 3 seconds.

Google uses mobile-first indexing, which means it primarily uses the mobile version of your pages for indexing and ranking. Its web crawler is called Googlebot, and when Googlebot comes crawling, it mostly uses its smartphone user agent to check your site out.

That said, there are still, and always will be, plenty of people using desktops. They are fast and easy to use, and a lot of websites are just more enjoyable to view on a desktop.

For SEO, what you want is one website for both mobile and desktop: the same content, pictures and videos, but responsive so that it adapts to different screen sizes.

If you are curious how your website matches up to Google's standards, run a Lighthouse audit (built into Chrome DevTools) or test the page in PageSpeed Insights to see your scores.

Mobile-friendly tips

Here are some mobile-friendly tips that apply if you have one website on the same domain, in other words one website that is displayed on both desktop and mobile. That is the way you should have things set up.

Tap targets (navigation links and buttons) should be at least 8px apart.

Optimize images: resize them for mobile and serve them responsively.

Page load speed: use a CDN or an anycast network.

Text that is too small: you don't want to go below 12px.

Set your viewport correctly. In an HTML page, you would add: `<meta name="viewport" content="width=device-width, initial-scale=1.0">`. Some will suggest adding user-scalable=no, but I disagree. It stops visually impaired users from zooming in, which is an accessibility problem. Lighthouse also flags it, which brings down your overall accessibility score.

Responsive design – This is not optional.

Avoid intrusive interstitials on mobile completely. On desktop they are still OK, but be careful how you use and display them, and give users an easy way to close the window.
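To check the viewport tag on a page, here is a minimal Python sketch using the standard library's HTML parser. It assumes the common comma-separated form of the `content` attribute. `ViewportFinder` and `check_viewport` are hypothetical helper names.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional


class ViewportFinder(HTMLParser):
    """Remember the content attribute of <meta name="viewport">."""

    def __init__(self) -> None:
        super().__init__()
        self.content: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "meta" and (a.get("name") or "").lower() == "viewport":
            self.content = a.get("content") or ""


def check_viewport(html: str) -> List[str]:
    """Return a list of viewport problems; an empty list means it looks fine."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    if finder.content is None:
        return ["missing viewport meta tag"]
    # Split "width=device-width, initial-scale=1.0" into key/value pairs.
    settings: Dict[str, str] = {}
    for part in finder.content.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    problems = []
    if settings.get("width") != "device-width":
        problems.append("width is not device-width")
    if settings.get("user-scalable") in ("no", "0"):
        problems.append("user-scalable=no blocks zooming")
    return problems
```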

Specify Preferred Domain

You need to specify your preferred domain. This lets search engines know which version of the domain is primary. Once you select your primary version you will want to stick with it for the long term.

Most websites are set up so that they are accessible both with www in the domain name and without it. If users type www.example.com or example.com into the browser, both take them to the same website.

For humans this is not an issue: the end result is the same, and they get to the website they want. Search engines, however, as odd as it seems, do not see them as the same.

Because search engines see them as two different addresses, you will run into indexing and duplicate content problems. This will result in your pages not living up to their ranking potential.

What do you do? You need to decide whether you want to go with www or lose the www. Once you have decided, you must remain consistent and stick with it for the lifetime of your website.

It may seem like a difficult choice to make but it’s not. Selecting one version over the other will not impact your SEO in a negative way or a positive way. It’s a matter of personal preference and also how you want your domain displayed in search engines.

The main point here is to inform search engines of your designated version and to stick with it.

How do you do this? On an Apache server, you can add an entry like this to your .htaccess file:

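```apache
RewriteEngine On
# Redirect if the request came in over plain http...
RewriteCond %{HTTPS} off [OR]
# ...or if the host name starts with www.
RewriteCond %{HTTP_HOST} ^www\. [NC]
# Capture the host name without any leading www. into %1
RewriteCond %{HTTP_HOST} ^(?:www\.)?(.+)$ [NC]
# Permanent (301) redirect to https://<host without www><same path>
RewriteRule ^ https://%1%{REQUEST_URI} [L,NE,R=301]
```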

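These rules send a permanent (301) redirect for any request that uses http or starts with www to the https, non-www address. That tells search engines and browsers you've selected non-www as your primary version: https://example.com. Now if a user types www.example.com into their browser, they are automatically redirected to https://example.com. It stays consistent!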
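If you want to sanity-check which addresses will be redirected, here is a minimal Python sketch of the same decision the rules above make. It assumes https and non-www are your preferred settings, and `preferred_url` is a hypothetical helper name. It only models the logic; the real redirect still happens in Apache.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit


def preferred_url(url: str) -> Optional[str]:
    """Return the https, non-www URL to redirect to, or None if url is already preferred."""
    parts = urlsplit(url)
    host = parts.netloc
    bare_host = host[4:] if host.lower().startswith("www.") else host
    if parts.scheme == "https" and bare_host == host:
        return None  # already https://example.com/..., so no redirect needed
    # Keep the same path and query string, just like the RewriteRule does.
    return urlunsplit(("https", bare_host, parts.path, parts.query, parts.fragment))


if __name__ == "__main__":
    for url in ("http://www.example.com/page.html?x=1",
                "https://example.com/about.html"):
        print(url, "->", preferred_url(url))
```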

Navigation and Website Structure

This technically falls under UI but does cross paths into Technical SEO best practices. Google looks at the overall structure of your website when analyzing individual pages and the entire site. Overlooking the structure of your website can do damage to your rankings.

Google wants to serve its users user-friendly websites. It puts a great deal of money into this research so it can give users what they want, and users want easy navigation; it's as simple as that. This rings even more true on mobile devices, where you want your tap targets spaced at least 8px apart.

Breadcrumb Menus

Breadcrumb menus allow users to navigate your website with ease, without having to press the back button. They also serve to guide search engines through the structure of your website.

With a WordPress website, this is as simple as enabling breadcrumbs.
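Outside WordPress, the breadcrumb trail can also be described to search engines with schema.org BreadcrumbList markup in JSON-LD. Here is a minimal Python sketch that builds it. It assumes you know each page's trail from the home page down. `breadcrumb_jsonld` and the example URLs are hypothetical.

```python
import json
from typing import List, Tuple


def breadcrumb_jsonld(trail: List[Tuple[str, str]]) -> str:
    """Build a BreadcrumbList <script> tag from (name, url) pairs, home page first."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)
        ],
    }
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")


if __name__ == "__main__":
    print(breadcrumb_jsonld([
        ("Home", "https://example.com/"),
        ("Blog", "https://example.com/blog/"),
        ("Technical SEO", "https://example.com/blog/technical-seo/"),
    ]))
```

The printed `<script>` tag goes in the page's HTML, alongside the visible breadcrumb menu.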

Structured Data Markup

Structured data markup lets Google know what type of content is on a particular page. It basically takes the guesswork out for crawlers and helps them understand exactly what the content is, in a language they understand. The key factor here is that Google uses structured data to display content from your pages as rich results in its search results. How is structured data related to technical SEO?

Structured data describes the content of a website, but it relates to technical SEO because the markup code needs to be added to the code of your web pages. If you are using WordPress, it can be as simple as selecting the structured data type and letting the CMS take care of the rest.

Main uses of structured data:

- FAQ pages

- Q&A pages

- Articles

- Recipes

- Events

- Job postings

- Local Business

To learn more about Structured Data it’s best to get the finer details straight from Google.
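To show what the markup itself looks like, here is a minimal Python sketch that builds schema.org Article markup as JSON-LD. It uses only a few common properties. The `article_jsonld` name and the example values are hypothetical, and Google's documentation lists the properties it actually reads for each rich result type.

```python
import json
from typing import List


def article_jsonld(headline: str, author: str, date_published: str,
                   images: List[str]) -> str:
    """Build an Article <script> tag; date_published is an ISO 8601 date."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
        "image": images,
    }
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")


if __name__ == "__main__":
    print(article_jsonld("Technical SEO Best Practices", "Jane Doe",
                         "2024-01-31", ["https://example.com/image-1.png"]))
```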

Pagination

Pagination is used when you want to break up a long page into multiple shorter pages and when you enable paging in your category pages.

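To avoid duplicate content issues, make use of rel="next" and rel="prev" links. These tell search engines that the pages belong to one series. Note that Google has said it no longer uses these links as an indexing signal, although other search engines may. Make sure every page in the series is crawlable and linked from the pages next to it.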
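Here is a minimal Python sketch that generates the `rel="prev"` and `rel="next"` tags for one page in a paginated series. It assumes a hypothetical URL scheme: page 1 lives at the base URL and page n at `base_url + "page/n/"`. `pagination_links` is a hypothetical helper name, so adapt both to your own site.

```python
from typing import List


def pagination_links(base_url: str, page: int, last_page: int) -> List[str]:
    """Return the <link rel="prev"/"next"> tags for `page` of a 1..last_page series."""

    def url_for(n: int) -> str:
        # Hypothetical URL scheme: /blog/ for page 1, /blog/page/2/ for page 2, ...
        return base_url if n == 1 else f"{base_url}page/{n}/"

    tags = []
    if page > 1:
        tags.append(f'<link rel="prev" href="{url_for(page - 1)}">')
    if page < last_page:
        tags.append(f'<link rel="next" href="{url_for(page + 1)}">')
    return tags


if __name__ == "__main__":
    for tag in pagination_links("https://example.com/blog/", 2, 3):
        print(tag)
```

The first page gets only a "next" link, the last page gets only a "prev" link, and pages in between get both.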

Multi-Lingual websites

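If the content on your website is in multiple languages, add hreflang annotations inside the `<head>` of your HTML: a `<link rel="alternate" hreflang="..." href="...">` tag for each language version of the page.

hreflang tells Google which language (and, optionally, which region) each page is meant for. That helps Google serve the right version to users in the right language or location.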
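Every language version should list all of the versions, including itself. Here is a minimal Python sketch that generates that set of tags, plus an `x-default` entry for users whose language doesn't match any version. It assumes one URL per language code. `hreflang_links` and the example URLs are hypothetical.

```python
from typing import Dict, List


def hreflang_links(versions: Dict[str, str], default_url: str) -> List[str]:
    """Return the hreflang <link> tags to place in the <head> of every language version."""
    tags = [f'<link rel="alternate" hreflang="{code}" href="{url}">'
            for code, url in versions.items()]
    # x-default names the page to show when no listed language matches the user.
    tags.append(f'<link rel="alternate" hreflang="x-default" href="{default_url}">')
    return tags


if __name__ == "__main__":
    for tag in hreflang_links({"en": "https://example.com/en/",
                               "fr": "https://example.com/fr/"},
                              "https://example.com/en/"):
        print(tag)
```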

Google Search Console and Bing Webmaster

Both these tools will help you manage your SEO for their respective search engines. Search Console obviously gets far more use than Bing Webmaster Tools, but in SEO you want to go all out, so Bing should still be on your radar. Both will help you keep up with Technical SEO best practices.

Both tools show you exactly how your website is performing. Pretty much all the data you need to analyze your website in the SERPs is in them: the search queries your site appears for, its average position for them and how many clicks they get.

You can submit URLs and sitemaps and check whether your pages have been indexed. If you submitted a page that has not been indexed, they will tell you so and why. Figuring out the issue can sometimes take some time; you might need to dig and test a lot, but knowing a page has not been indexed is extremely valuable.

Microsoft, the company behind Bing Webmaster Tools, also offers a great free tool called Clarity. Using it, you can fine-tune your user experience. It actually shows you how users scroll down the page, where they stop, and heatmaps of where they click and tap on mobile devices.

The Short and Skinny

As you can see, adhering to Technical SEO best practices takes some work. It can however get much more complicated, especially with large, script/plugin heavy sites.

It is not something you should ignore, and it is just as important as other aspects of SEO. Always make sure your Technical SEO is in check, and make it one of the first things you do. When users start to flow in, you want everything ready for their visit.